Great Expectations

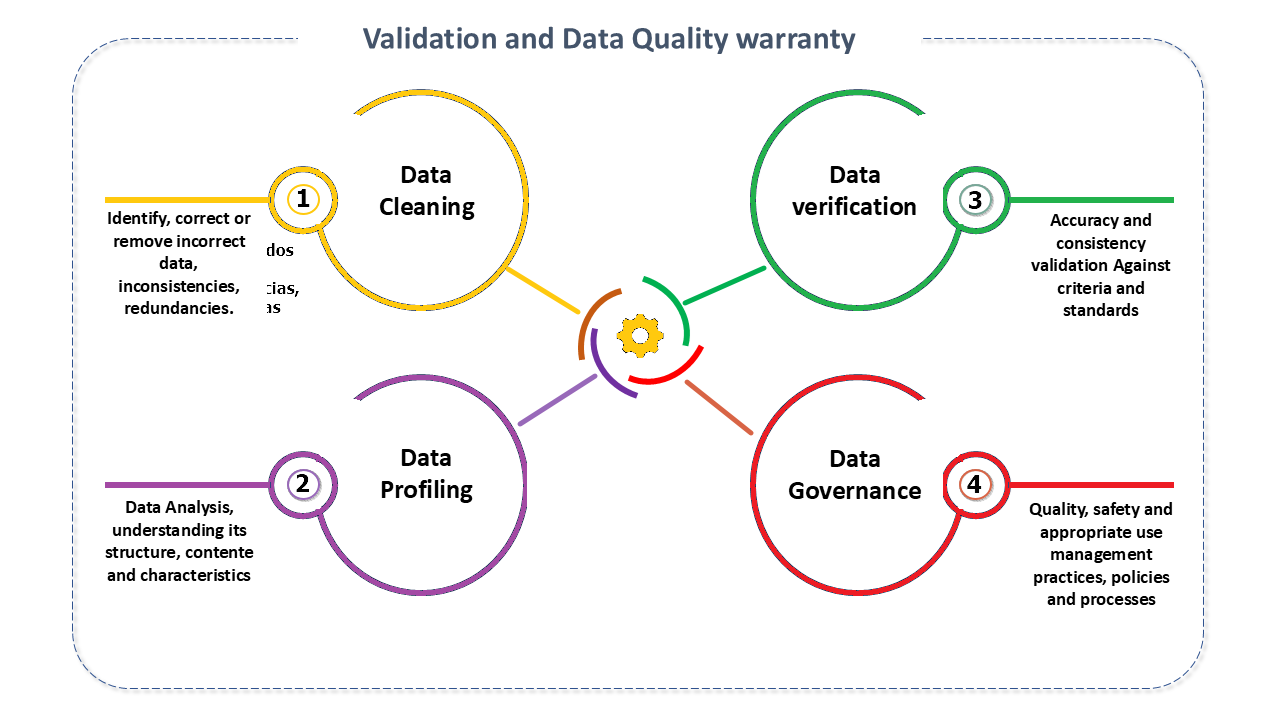

Data Validation and Quality Assurance

In the context of Big Data and data pipelines, data validation plays an essential role.

It is a carefully planned process to ensure that the data collected or processed meets previously defined standards, criteria and formats.

Before moving on to analytics, reporting, or machine learning models, data goes through a rigorous "fine-tooth comb," ensuring its quality and consistency. This validation is a crucial checkpoint, as incorrect data can lead to disastrous decisions, misguided analysis, and, in the case of regulated organizations, even legal implications.

The importance of this process goes beyond simply identifying errors. It is the foundation of an organization's trust in its data.

Relying on data that hasn't been verified is risky: simple errors such as missing values or inconsistencies between tables can compromise entire results. In addition, automating the validation process reduces manual intervention, making it more efficient and preventing bottlenecks in complex pipelines. When done correctly, it also supports compliance with regulatory requirements and internal policies, which is indispensable in industries such as healthcare, finance, and technology.

Features of Great Expectations

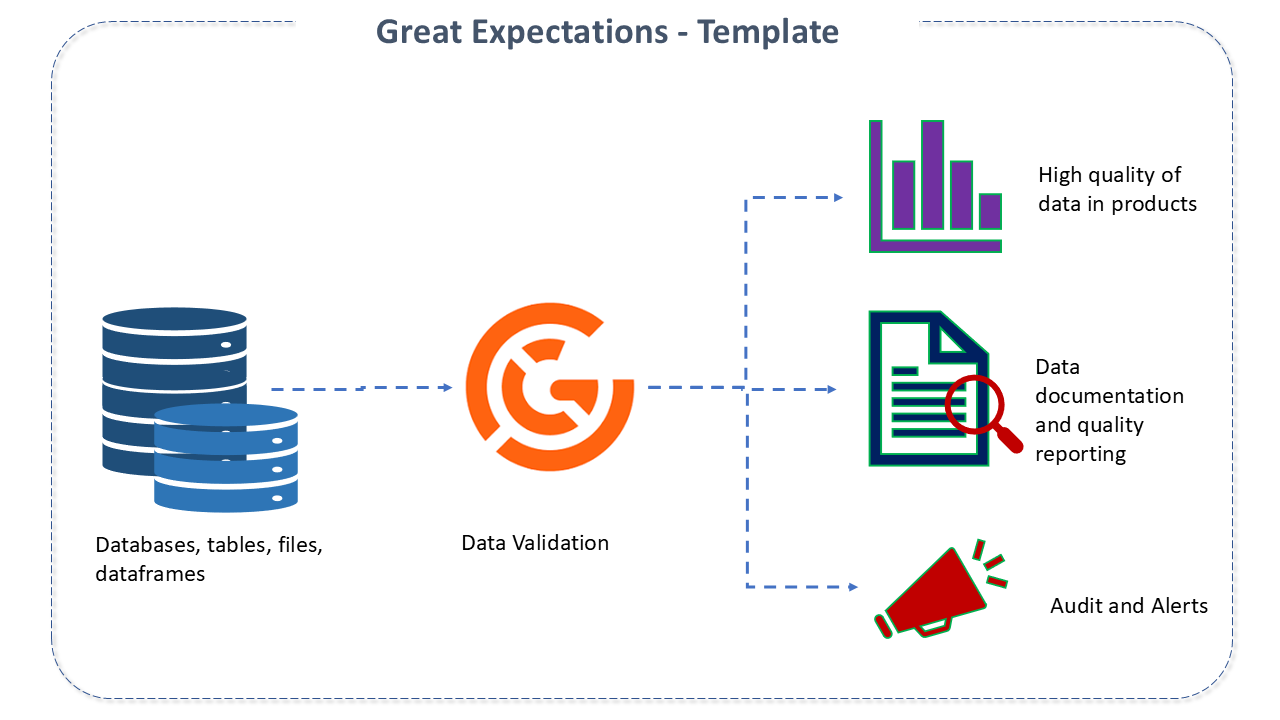

Great Expectations (GX) is an open-source tool designed to make the entire process of validating and ensuring data quality more accessible, automated, and efficient. It solves challenges faced by data science and engineering teams when dealing with complex pipelines, such as a lack of visibility into data quality and difficulties integrating continuous checks into workflows.

At the heart of Great Expectations is the concept of "expectations." These expectations are essentially rules that define how data should behave. They can be as simple as "this column must not contain null values," or as sophisticated as "the values in this column must be in a range between 0 and 100, with an expected average of 50."
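To make the idea concrete, the two rules quoted above can be sketched in plain Python. This is only an illustration of what an expectation checks, not the GX API: the function names, the result keys, and the tolerance around the average are all assumptions made for this example.

```python
from statistics import mean

# Illustrative stand-ins for expectations; these are NOT Great Expectations calls.

def expect_values_not_null(values):
    """Pass only if no value is None."""
    null_count = sum(1 for v in values if v is None)
    return {"success": null_count == 0, "unexpected_count": null_count}

def expect_values_between(values, min_value, max_value):
    """Pass only if every non-null value lies in [min_value, max_value]."""
    out_of_range = [
        v for v in values
        if v is not None and not (min_value <= v <= max_value)
    ]
    return {"success": not out_of_range, "unexpected_count": len(out_of_range)}

def expect_mean_close_to(values, target, tolerance):
    """Pass only if the mean of the non-null values is within tolerance of target."""
    present = [v for v in values if v is not None]
    observed = mean(present)
    return {"success": abs(observed - target) <= tolerance, "observed_value": observed}

# Hypothetical column of scores used only for this example.
scores = [40, 55, 60, 45, 50]

results = [
    expect_values_not_null(scores),
    expect_values_between(scores, 0, 100),
    expect_mean_close_to(scores, target=50, tolerance=5),  # tolerance is an assumption
]
all_passed = all(r["success"] for r in results)
```

Each rule returns a small result record rather than raising an error, which mirrors the idea that a validation run reports on every rule instead of stopping at the first failure.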

The flexibility to define specific rules makes GX a powerful tool for meeting diverse data validation needs.

One of the most outstanding features of the tool is its ability to generate interactive reports, known as Data Docs. These reports not only document the quality of the data, but also allow for a visual analysis of the validations performed, facilitating communication between technical and non-technical teams. They are ideal for promoting transparency in organizations where data quality is critical.

Great Expectations Architecture

The architecture of Great Expectations is designed to be modular and adaptable. It is composed of three main elements:

- Expectations: Represent the rules that the data must meet. For example, "the values in the 'age' column must be between 0 and 120."

- Validation Results: Indicate whether or not data meets expectations, providing detailed compliance metrics.

- Data Docs: These are automatically generated interactive reports that provide a clear and accessible view of the quality of the validated data.

These components work together to create a workflow that begins with setting expectations, goes through data validation, and culminates in generating reports that document and monitor data quality over time.
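The flow from expectations to validation results to a report can be sketched as follows. This is a simplified model in plain Python, assuming rows are dictionaries; the class names `Expectation` and `ValidationResult` and the functions `validate` and `render_report` are hypothetical and do not correspond to GX classes or to the real Data Docs renderer.

```python
from dataclasses import dataclass
from html import escape
from typing import Callable

@dataclass
class Expectation:
    """A rule the data must meet (hypothetical model, not a GX class)."""
    description: str
    check: Callable[[dict], bool]  # returns True when a row meets the rule

@dataclass
class ValidationResult:
    """Outcome of applying one expectation to a dataset."""
    description: str
    success: bool
    unexpected_count: int
    total_count: int

def validate(rows, expectations):
    """Apply every expectation to every row and collect compliance metrics."""
    results = []
    for exp in expectations:
        unexpected = sum(1 for row in rows if not exp.check(row))
        results.append(
            ValidationResult(exp.description, unexpected == 0, unexpected, len(rows))
        )
    return results

def render_report(results):
    """Render results as a small HTML table, loosely imitating a report page."""
    lines = ["<table>", "<tr><th>Expectation</th><th>Status</th><th>Unexpected</th></tr>"]
    for r in results:
        status = "passed" if r.success else "failed"
        lines.append(
            f"<tr><td>{escape(r.description)}</td><td>{status}</td>"
            f"<td>{r.unexpected_count}/{r.total_count}</td></tr>"
        )
    lines.append("</table>")
    return "\n".join(lines)

# Hypothetical sample data: one age falls outside the allowed range.
rows = [{"age": 34}, {"age": 150}, {"age": 27}]
age_in_range = Expectation(
    "age is between 0 and 120", lambda row: 0 <= row["age"] <= 120
)

results = validate(rows, [age_in_range])
report = render_report(results)
```

Keeping the three pieces separate is the point of the design: rules can be reused across datasets, results can be stored and compared over time, and the report is just one view of those results.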

Great Expectations Philosophy

The philosophy of Great Expectations is simple and transformative: to enable organizations to define and validate what they expect from their data in a structured and reusable way.

It formalizes these expectations in a configurable format, often represented in JSON, allowing for easy maintenance and integration with other tools.
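As a rough illustration of what such a JSON representation can look like, the sketch below builds a small suite as a Python dictionary and round-trips it through the standard `json` module. The expectation names are ones GX provides, but the suite name is hypothetical and the surrounding key names (`expectation_suite_name`, `expectation_type`, `kwargs`) are an assumption that differs between GX versions, so check the documentation of the release in use.

```python
import json

# Assumed layout of a stored expectation suite; key names vary across GX versions.
suite = {
    "expectation_suite_name": "customers_suite",  # hypothetical suite name
    "expectations": [
        {
            "expectation_type": "expect_column_values_to_not_be_null",
            "kwargs": {"column": "age"},
        },
        {
            "expectation_type": "expect_column_values_to_be_between",
            "kwargs": {"column": "age", "min_value": 0, "max_value": 120},
        },
    ],
}

# Serialize to JSON text (what would be kept under version control) and read it back.
serialized = json.dumps(suite, indent=2)
restored = json.loads(serialized)
```

Because the rules live in a plain data format rather than in code, they can be reviewed, diffed, and shared between pipelines like any other configuration file.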

With a catalog of more than 70 types of predefined expectations, GX covers most data validation needs.

In addition, it is possible to create custom expectations for specific cases, which keeps the tool flexible as requirements grow.

Great Expectations Features

Great Expectations offers a number of features that make it stand out as a robust solution for data validation:

- Broad Data Source Support: Supports CSV files, Parquet, relational databases, and data lakes.

- Local or Cloud Execution: Flexibility to run in different environments.

- Simple and Intuitive Setup: User-friendly interfaces to set and manage expectations.

- Active Community: Constant updates and support from the community.

Great Expectations is more than a validation tool. It is a milestone in the evolution of data quality assurance, offering clear solutions to complex challenges.

Details of Project Great Expectations

Great Expectations is built on Python, taking advantage of the language's popularity and versatility to make it easier to integrate into data pipelines. Python is widely used by data scientists and engineers, making GX accessible and easy to adopt.

Sources: