Apache Ambari

Cluster Management

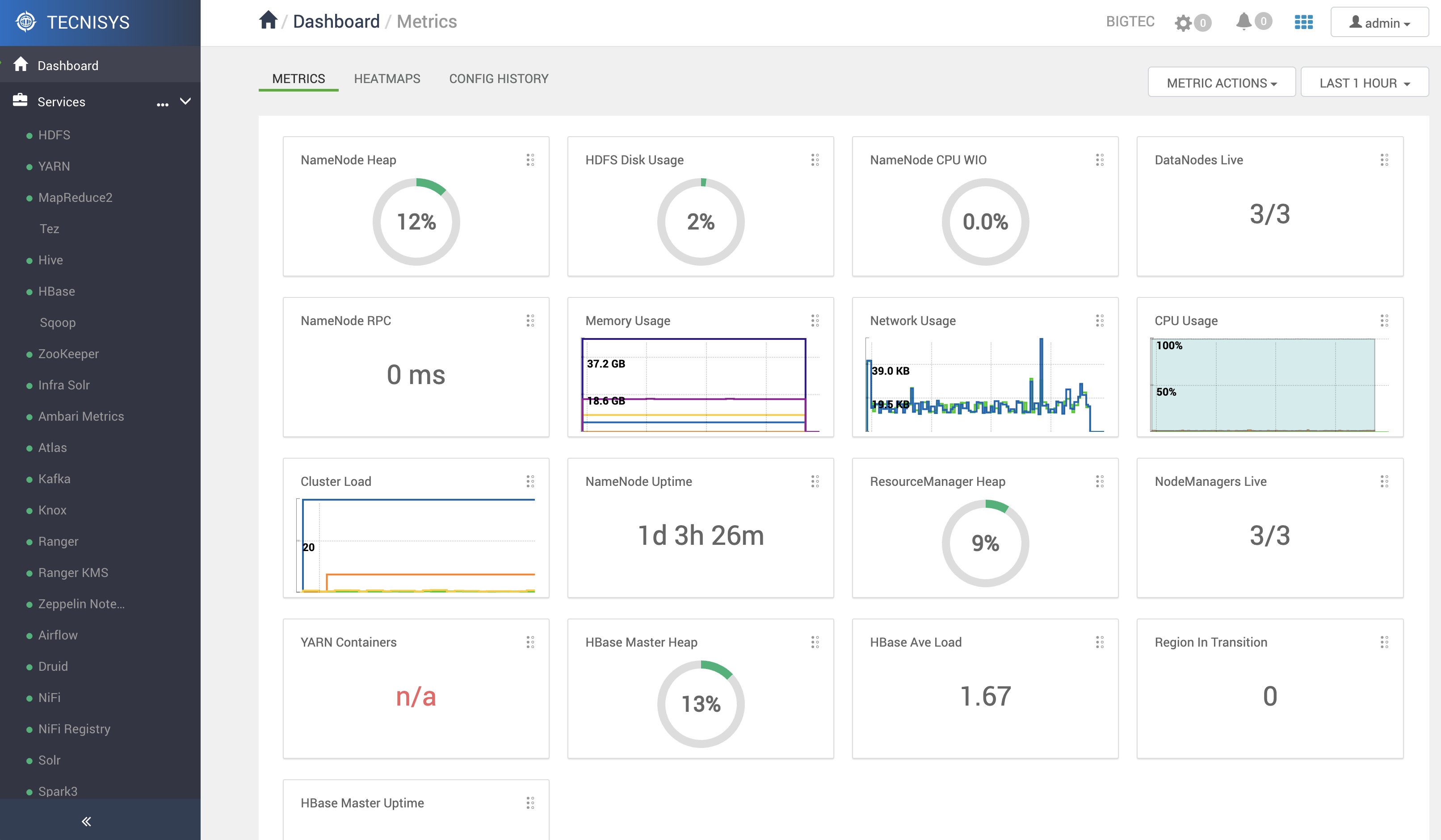

Developed to simplify the deployment and administration of Big Data Clusters, Apache Ambari enables automated deployment, service and host management, environment monitoring, configuration versioning, and much more.

Its consistent architecture, robust REST API, and intuitive and interactive web interface provide all the necessary resources for centralized cluster administration.

Currently, the Apache Ambari project is thriving thanks to an active and renewed community, and its ongoing development can be followed in its GitHub repository.

Objectives

The main objectives, defined at the beginning of the project, include:

- Platform Independence: The system must architecturally support any hardware and operating system. Components that are inherently platform-dependent must be pluggable through well-defined interfaces.

- Pluggable Components: The architecture should not assume specific tools and technologies. Any specific tools and technologies should be encapsulated by pluggable components. The architecture should be easily extensible. The goal of pluggability does not cover the standardization of protocols between components or interfaces to operate with third-party implementations.

- Version and Upgrade Management: Ambari components running on multiple hosts must support multiple protocol versions so that they can be upgraded independently. Upgrading any Ambari component should not affect the Cluster state.

- Extensibility: The architecture must support the addition of new services, components, and APIs. Extensibility also implies that the configuration and provisioning steps for Service Platforms can be modified easily. Additionally, support for different Service Platforms must be considered.

- Failure Recovery: The system must be able to recover from any component failure to a consistent state. The system should attempt to complete pending operations after recovery. If certain errors are unrecoverable, the failure should still keep the system in a consistent state.

- Security: Security implies 1) authentication and authorization based on user profiles (API and web interface), 2) installation, management, and monitoring of the Service Platform protected via Kerberos, and 3) authentication and encryption of network communication between Ambari components.

- Error Tracking: The architecture should strive to simplify the fault tracking process. Failures should be propagated to the user with the details and indicators necessary for analysis.

- Intermediate Feedback and Near Real-Time Operations: For long-running operations, the system must give the user timely (near real-time) feedback on intermediate progress: the tasks currently executing, the percentage of the operation completed, and a reference to the operation log.
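
The intermediate-feedback objective can be illustrated by aggregating per-task status reports into a progress summary. This is a minimal sketch under stated assumptions, not Ambari's implementation: the `TaskStatus` values, the `TaskReport` fields, the log paths, and the formula (finished tasks divided by total tasks) are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class TaskStatus(Enum):
    """Hypothetical task states used only for this sketch."""
    QUEUED = "QUEUED"
    IN_PROGRESS = "IN_PROGRESS"
    COMPLETED = "COMPLETED"
    FAILED = "FAILED"


# States after which a task makes no further progress.
TERMINAL = {TaskStatus.COMPLETED, TaskStatus.FAILED}


@dataclass
class TaskReport:
    """One status report for a task, as a node might send it (hypothetical fields)."""
    task_id: int
    host: str
    status: TaskStatus
    log_path: str  # reference to the task's log, so the user can inspect it


def progress_snapshot(reports: list[TaskReport]) -> dict:
    """Summarize an operation's progress for near real-time display."""
    total = len(reports)
    finished = sum(1 for r in reports if r.status in TERMINAL)
    # An operation with no tasks is trivially complete.
    percent = 100.0 if total == 0 else round(100.0 * finished / total, 1)
    return {
        "percent_complete": percent,
        # Running tasks with their log references, so the user can follow them live.
        "running": [(r.task_id, r.host, r.log_path)
                    for r in reports if r.status is TaskStatus.IN_PROGRESS],
        # Failed tasks are surfaced right away to support error tracking.
        "failed": [r.task_id for r in reports if r.status is TaskStatus.FAILED],
    }


if __name__ == "__main__":
    reports = [
        TaskReport(1, "n1", TaskStatus.COMPLETED, "logs/task-1.log"),
        TaskReport(2, "n2", TaskStatus.IN_PROGRESS, "logs/task-2.log"),
        TaskReport(3, "n2", TaskStatus.QUEUED, "logs/task-3.log"),
    ]
    print(progress_snapshot(reports))
```

Counting only finished tasks keeps the formula simple; a real system might also give partial credit to running tasks so that the percentage advances more smoothly.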

Terminology

The following Apache Ambari terms are used throughout this documentation:

- Service: A service of the Service Platform, such as HDFS, YARN, Spark, or Kafka. A service can have multiple components (e.g. HDFS has NameNode, DataNode, etc.) or consist only of a client library (e.g. Sqoop has no daemon, just a client library).

- Component: A service consists of one or more components. For example, HDFS has 3 components: NameNode, Secondary NameNode, and DataNode. Components may be optional. A component can span multiple nodes (for example, instances of the DataNode component on multiple nodes).

- Node (or Host): Refers to a machine (physical or virtual) in the Cluster. Node and host are used interchangeably in this documentation.

- Operation: A set of changes or actions performed on a cluster to satisfy a user request or to achieve a desired state change in the Cluster. For example, starting a service and running a smoke test are operations. If a user request to add a new service to the Cluster also includes running a smoke test, the entire set of actions that fulfills the request makes up a single operation. An operation can consist of multiple ordered "actions".

- Task: A task is the unit of work sent to a node for execution, that is, the work the node must perform as part of an action. For example, an "action" may consist of installing a DataNode on node N1 and installing a DataNode and a Secondary NameNode on node N2. In this case, the "task" for N1 is to install a DataNode, and the "tasks" for N2 are to install a DataNode and a Secondary NameNode.

- Stage: A stage is a set of tasks, required to complete an operation, that are independent of each other. All tasks in the same stage can run on different nodes in parallel.

- Action: An "action" consists of one or more tasks on a machine or group of machines. Each action is tracked by an ID, and nodes report status at least at action granularity. An action can be considered a step in the execution. In this documentation, stages and actions have a one-to-one correspondence unless otherwise specified. The action ID is a bijection of the (request-id, stage-id) pair: each pair identifies exactly one action, and each action ID identifies exactly one pair.

- Stage Plan: An operation typically consists of multiple tasks on multiple machines, and these tasks often have dependencies that require them to run in a specific order: some tasks must complete before others can be scheduled. The tasks of an operation are therefore divided into stages, where each stage must complete before the next one starts, while all tasks within a stage can be scheduled in parallel on different nodes.

- Manifest: The manifest refers to the definition of a task that is sent to a node for execution. The manifest must completely define the task and must be serializable. The manifest can also be persisted to disk for recovery or logging.

- Role: A role maps to either a component (e.g. NameNode) or an action (e.g. HDFS rebalancing, HBase smoke test, etc.).
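
A stage plan is, in effect, a layered topological sort of the tasks' dependency graph. The sketch below illustrates the idea under stated assumptions and is not Ambari's scheduler: the task names, the dependency map, and the `requestId-stageId` string used as an action ID are hypothetical, chosen only to show stage grouping and an invertible mapping between a (request-id, stage-id) pair and an action ID.

```python
def build_stage_plan(tasks: set[str], deps: dict[str, set[str]]) -> list[list[str]]:
    """Split an operation's tasks into ordered stages (a layered topological sort).

    deps[t] holds the tasks that must finish before task t can start. Each
    stage contains only tasks whose prerequisites all sit in earlier stages,
    so the tasks of one stage are independent and can run in parallel.
    """
    # Prerequisites still pending for each task; dependencies on tasks outside
    # this operation are ignored.
    pending = {t: set(deps.get(t, set())) & tasks for t in tasks}
    stages: list[list[str]] = []
    while pending:
        # Sorting only makes the output deterministic.
        ready = sorted(t for t, before in pending.items() if not before)
        if not ready:
            raise ValueError("tasks have a cyclic dependency")
        stages.append(ready)
        for t in ready:
            del pending[t]
        for before in pending.values():
            before.difference_update(ready)
    return stages


def action_id(request_id: int, stage_id: int) -> str:
    """Encode the (request-id, stage-id) pair as one action ID (hypothetical format)."""
    return f"{request_id}-{stage_id}"


def parse_action_id(value: str) -> tuple[int, int]:
    """Invert action_id, recovering the (request-id, stage-id) pair."""
    request_id, stage_id = value.split("-")
    return int(request_id), int(stage_id)


if __name__ == "__main__":
    # Illustrative (hypothetical) dependencies for deploying a small HDFS cluster.
    tasks = {
        "install NAMENODE@N1", "install DATANODE@N1", "install DATANODE@N2",
        "start NAMENODE@N1", "start DATANODE@N1", "start DATANODE@N2",
        "HDFS smoke test",
    }
    deps = {
        "start NAMENODE@N1": {"install NAMENODE@N1"},
        "start DATANODE@N1": {"install DATANODE@N1", "start NAMENODE@N1"},
        "start DATANODE@N2": {"install DATANODE@N2", "start NAMENODE@N1"},
        "HDFS smoke test": {"start DATANODE@N1", "start DATANODE@N2"},
    }
    for stage_id, stage in enumerate(build_stage_plan(tasks, deps)):
        print(action_id(1, stage_id), stage)
```

With these example dependencies, the three installs form the first stage, the NameNode start forms the second, the two DataNode starts form the third, and the smoke test forms the last; within each stage, the tasks could be dispatched to their nodes in parallel.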

Architecture

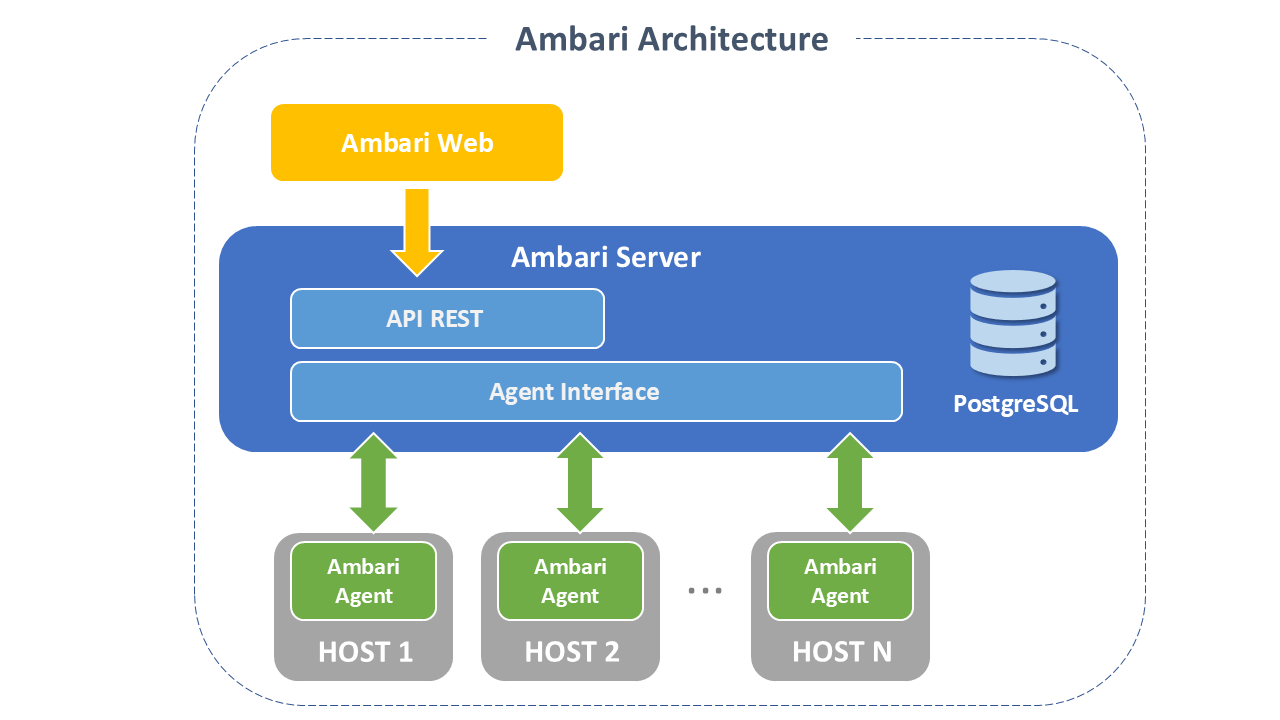

The Ambari architecture involves:

- Ambari Web: A client-side application responsible for the web interface that displays information and performs operations on the Cluster through the REST API provided by Ambari Server.

- Ambari Server: The master component responsible for controlling the Cluster. It provides several entry points for different needs, such as:

  - Daemon Management: Entry point for starting, stopping, resetting, and restarting the Ambari Server daemon.

  - Software Update: Entry point for upgrading Ambari Server after installation.

  - Software Configuration: Entry point for the initial Ambari setup.

  - Authentication and Authorization Configuration: Entry point for setting up identity management, authentication, and authorization. Available options: LDAP (Lightweight Directory Access Protocol), PAM (Pluggable Authentication Module), and Kerberos.

  - Backup and Restore: Entry point for backing up (snapshot) and restoring the current installation, excluding the database.

- Ambari Agent: Slave component responsible for executing actions and sending information and metrics from a given host.

- Database: Persistence layer for the state, metrics, and metadata of the Cluster infrastructure (services and hosts). Ambari supports several Relational Database Management Systems (RDBMS), such as PostgreSQL (default), MySQL, MariaDB, Oracle, and Microsoft SQL Server; the RDBMS is chosen during the initial Ambari Server setup.
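
Because Ambari Web is only one client of the Ambari Server REST API, the same operations can be scripted with any HTTP client. The minimal sketch below builds, but does not send, such requests with Python's standard library. It assumes HTTP Basic authentication, the `/api/v1` base path, and the `X-Requested-By` header that Ambari Server expects on state-changing calls; the server address, cluster name, and credentials are hypothetical placeholders, and the request body for starting a service should be checked against the API reference for your Ambari version.

```python
import base64
import json
import urllib.request


def ambari_request(server, path, user, password, method="GET", body=None):
    """Build (without sending) a request against the Ambari Server REST API."""
    url = f"{server.rstrip('/')}/api/v1/{path.lstrip('/')}"
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = urllib.request.Request(url, data=data, method=method)
    # HTTP Basic authentication with the Ambari user's credentials.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", f"Basic {token}")
    # Ambari Server rejects state-changing calls without this header (CSRF protection).
    req.add_header("X-Requested-By", "ambari")
    return req


def start_service(server, cluster, service, user, password):
    """Ask Ambari Server to move a service to the STARTED state."""
    body = {
        "RequestInfo": {"context": f"Start {service}"},
        "Body": {"ServiceInfo": {"state": "STARTED"}},
    }
    return ambari_request(server, f"clusters/{cluster}/services/{service}",
                          user, password, method="PUT", body=body)


if __name__ == "__main__":
    # Hypothetical server, cluster, and credentials.
    req = start_service("http://ambari.example.com:8080", "mycluster", "HDFS",
                        "admin", "admin")
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

In Ambari, a state-changing call like this is typically answered with a reference to an asynchronous request resource, which a client can then poll for progress in the same way Ambari Web does.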

Languages

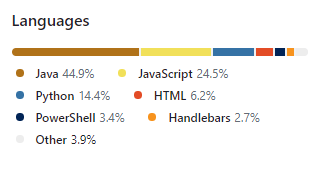

Ambari is predominantly developed in Java, JavaScript, and Python.

Sources: