Apache Atlas

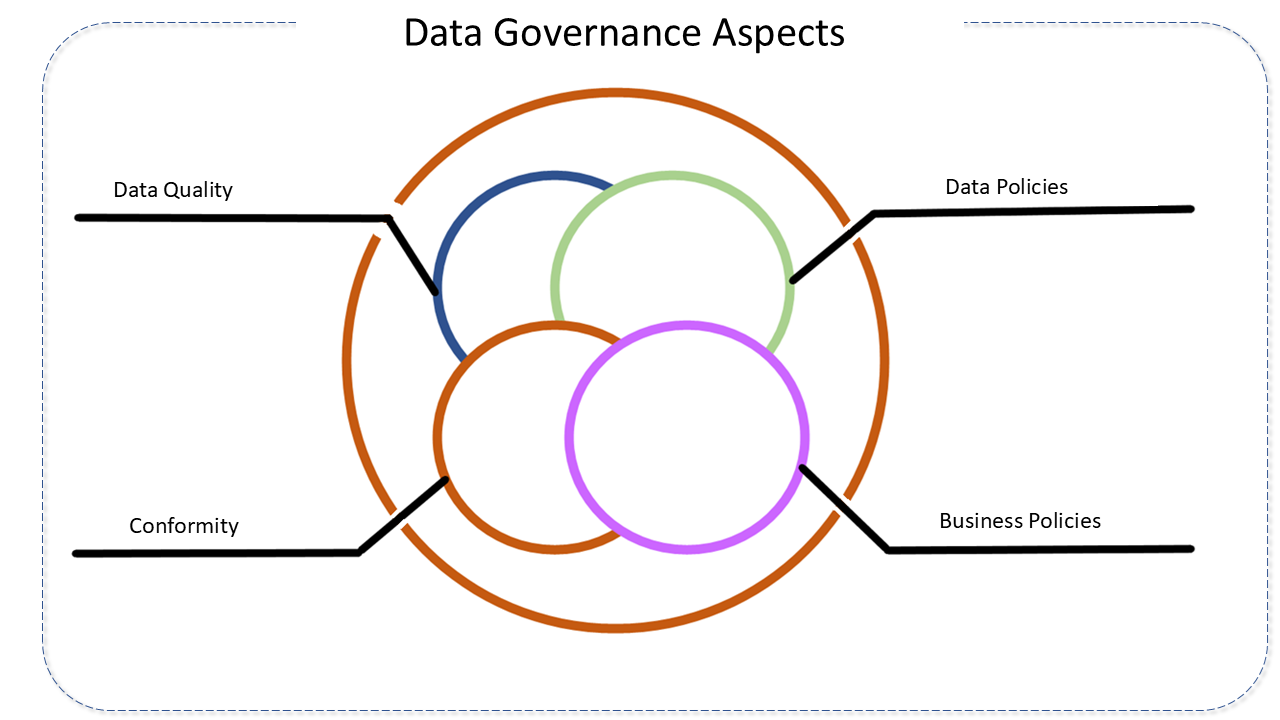

Data Governance

As modern organizations push for faster and more valuable decision-making, the market offers a growing range of technologies, environments, and components built around "real-time," "near-real-time," "streaming," and similar strategies that transform data and deliver information in the shortest possible time.

Although this analytical evolution adds considerable capacity to digital transformation and optimizes technology infrastructure, it requires organizations to have a structured "foundation" and high maturity in maintaining processes and assets.

This is where Data Governance comes in: a fundamental process that keeps the organization aligned with its compliance obligations. It improves the quality of insights, supports the adoption of regulatory requirements, reduces costs through centralized policies and systems, keeps data growth controlled and organized, and protects data against setbacks. It does so within a culture favorable to innovation, and it spans the entire technology pipeline through which data travels.

Apache Atlas Features

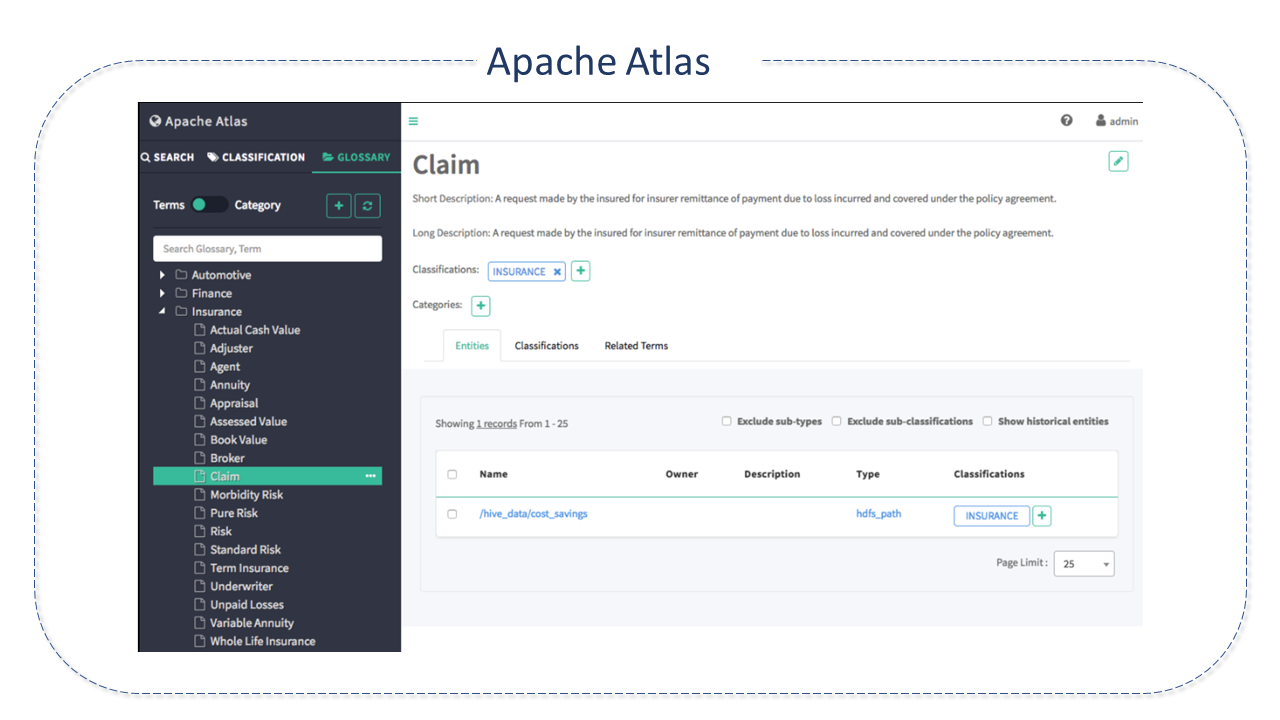

Apache Atlas is a scalable set of core governance services provided for Hadoop, aiming to assist organizations in meeting their compliance requirements. For this, it uses prescriptive and forensic models enriched by business taxonomic metadata.

Apache Atlas allows organizations to build a catalog of their assets, classify and manage those assets, and provide collaboration capabilities around them for data scientists and governance teams.

The tool was designed to exchange metadata with other tools and processes inside and outside the Hadoop stack, thus allowing platform-independent governance controls to effectively meet compliance requirements.

These services include:

- Search and prescriptive lineage: facilitating predefined and ad hoc exploration of data and metadata and maintaining a history of data sources and how specific data were generated.

- Metadata-driven data access control.

- Flexible modeling of business and operational data.

- Data classification: assisting in understanding the nature of data and its classification based on external and internal sources.

- Metadata exchange with other metadata tools.

Apache Atlas Architecture

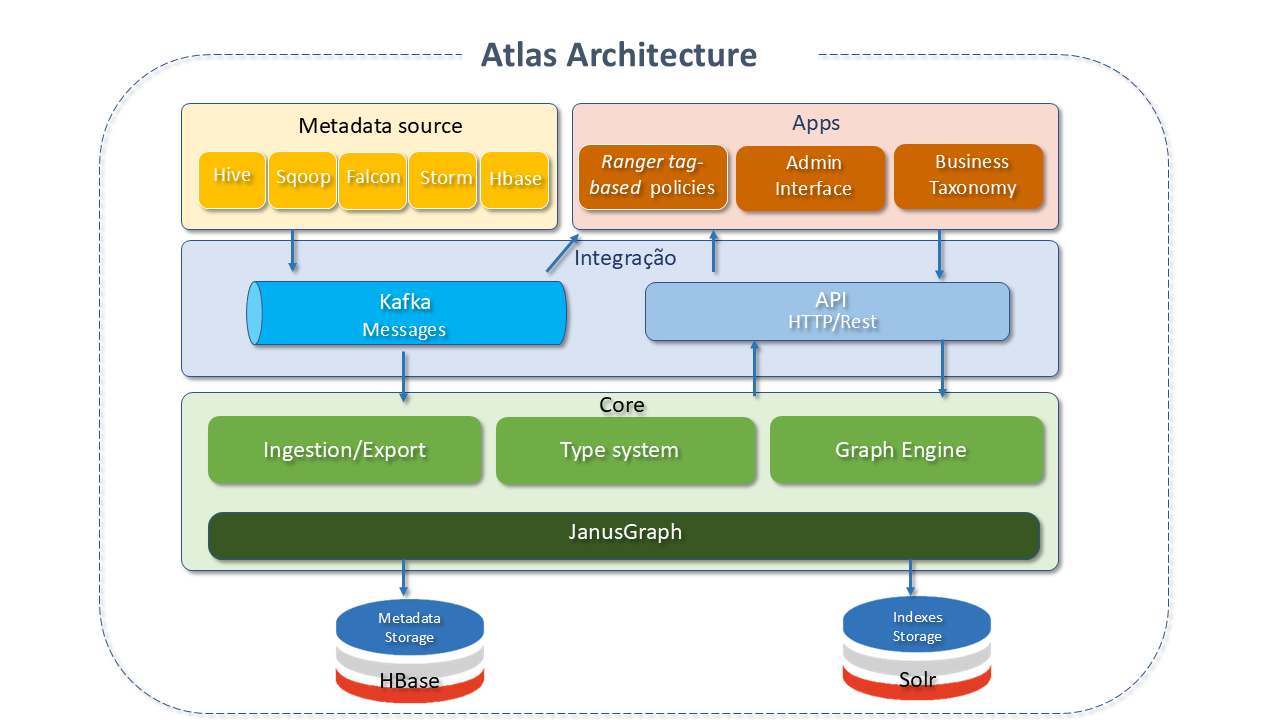

The architecture of Apache Atlas involves the following components:

Core

- Type System: Allows the definition of a model for the metadata objects that you want to manage.

  - The model is composed of definitions called types.

  - The instances of types are called entities and represent the metadata objects that are managed.

  - All metadata objects managed by Atlas in the out-of-the-box (ready-to-use) model are modeled using types and represented as entities.

  - The generic nature of modeling in Atlas allows integrators and data administrators to define technical and business metadata and relate them through Atlas features.

- Graph Engine: Internally, Atlas persists the metadata objects it manages using a graph model. This approach provides great flexibility and enables efficient handling of relationships between metadata objects.

  - The graph engine is responsible for translating between the types and entities of the Type System and the underlying graph persistence model.

  - The graph engine also creates indexes for metadata objects so that searches can be performed efficiently.

  - Atlas uses JanusGraph to store metadata objects.

- Ingestion/Export: The ingest component allows metadata to be added to Atlas. The export component exposes the metadata changes detected by Atlas as events, which consumers can read to react to metadata changes in real time.
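
To make the Type System more concrete, the sketch below builds the kind of JSON payload that the Atlas v2 REST API accepts for type definitions (`POST /api/atlas/v2/types/typedefs`), plus one matching entity. The type name `sample_report`, its attributes, and the qualified name are hypothetical and exist only for illustration; `DataSet` is one of the built-in supertypes. It shows only a subset of the available fields and should be read as a minimal illustration, not a complete model.

```python
import json


def attribute_def(name, type_name, optional=True):
    """Describe one attribute of a type (only a subset of the possible fields)."""
    return {
        "name": name,
        "typeName": type_name,
        "isOptional": optional,
        "cardinality": "SINGLE",
    }


def entity_type_def(name, super_types, attributes):
    """Describe a type: a named definition with supertypes and attributes."""
    return {
        "category": "ENTITY",
        "name": name,
        "superTypes": list(super_types),
        "attributeDefs": [attribute_def(n, t) for n, t in attributes],
    }


def entity_instance(type_name, qualified_name, **attributes):
    """Describe an entity: one instance of a type, i.e. one metadata object."""
    attrs = {"qualifiedName": qualified_name}
    attrs.update(attributes)
    return {"entity": {"typeName": type_name, "attributes": attrs}}


# Hypothetical type: a report dataset that extends the built-in DataSet type.
typedefs = {
    "entityDefs": [
        entity_type_def(
            "sample_report",
            ["DataSet"],
            [("refreshSchedule", "string"), ("rowCount", "long")],
        )
    ]
}

# Hypothetical entity of that type.
report = entity_instance(
    "sample_report",
    "sales.weekly_report@demo",
    name="weekly_report",
    refreshSchedule="weekly",
    rowCount=1200,
)

print(json.dumps(typedefs, indent=2))
print(json.dumps(report, indent=2))
```

The split mirrors the text above: `typedefs` is the model (types), while `report` is a managed metadata object (an entity) that refers to its type by name.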

Integration

There are two methods to manage metadata in Atlas:

- API: All Atlas functionality is exposed to end users through a REST API, which allows types and entities to be created, updated, and deleted. It is also the main mechanism for querying and discovering the types and entities managed by Atlas.

- Messaging: In addition to the API, users can integrate with Atlas through a messaging interface.

  - This interface is useful both for communicating metadata objects to Atlas and for consuming metadata change events from Atlas. It is particularly useful when a more loosely coupled integration is wanted, for example to gain scalability and reliability.

  - Atlas uses Kafka as a notification server for communication between hooks and downstream consumers of metadata notification events. Events are written by the hooks and by Atlas to different Kafka topics.
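
As an illustration of querying through the REST API, the sketch below prepares (without sending) a request to the DSL search endpoint, `GET /api/atlas/v2/search/dsl`. The base URL, the `admin`/`admin` credentials, and the table name `customers` are assumptions for a local test instance; adjust them to the target environment.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: a local Atlas server on its usual default port.
ATLAS_BASE_URL = "http://localhost:21000"


def dsl_search_request(query, limit=10, offset=0,
                       base_url=ATLAS_BASE_URL,
                       user="admin", password="admin"):
    """Build a GET request for the DSL search endpoint (it is not sent here)."""
    params = urlencode({"query": query, "limit": limit, "offset": offset})
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return Request(
        f"{base_url}/api/atlas/v2/search/dsl?{params}",
        headers={
            "Authorization": f"Basic {token}",
            "Accept": "application/json",
        },
        method="GET",
    )


# Hypothetical query: look up a Hive table by name.
request = dsl_search_request('hive_table where name = "customers"')
print(request.get_method(), request.full_url)

# Against a running server, the request could be executed with:
#   import json, urllib.request
#   with urllib.request.urlopen(request) as response:
#       results = json.load(response)
```

Building the request separately from sending it keeps the example runnable without a server and makes the URL encoding of the query easy to inspect.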

Metadata Sources

Atlas supports integration with several metadata sources out of the box. Integration implies two things:

- Atlas natively defines metadata models to represent the objects of these components.

- Atlas provides components to ingest metadata objects from these sources, in real time or in batch mode.

Currently, it supports the ingestion and management of metadata from the following sources:

- HBase

- Hive

- Sqoop

- Storm

- Kafka

- Falcon
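
For batch-style ingestion, one possible approach is to send entities in groups to the bulk entity endpoint (`POST /api/atlas/v2/entity/bulk`). The sketch below only prepares the request bodies. The cluster name `demo`, the database list, and the batch size are hypothetical, and the attribute set shown for `hive_db` is an assumption that should be checked against the type definition of the Atlas version in use. In practice, the hooks and import utilities shipped with Atlas usually handle this step.

```python
import json


def hive_db_entity(cluster, database, owner):
    """Describe one Hive database as an Atlas entity (assumed attribute set)."""
    return {
        "typeName": "hive_db",
        "attributes": {
            # Common naming convention: <database>@<cluster>
            "qualifiedName": f"{database}@{cluster}",
            "name": database,
            "clusterName": cluster,
            "owner": owner,
        },
    }


def bulk_payloads(cluster, databases, batch_size=2):
    """Yield request bodies for the bulk endpoint, batch_size entities each."""
    entities = [hive_db_entity(cluster, name, owner) for name, owner in databases]
    for start in range(0, len(entities), batch_size):
        yield {"entities": entities[start:start + batch_size]}


# Hypothetical catalog of (database, owner) pairs to register.
catalog = [("sales", "etl"), ("finance", "etl"), ("hr", "admin")]
payloads = list(bulk_payloads("demo", catalog))

for body in payloads:
    print(json.dumps(body))
```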

Applications

The metadata managed by Apache Atlas is consumed by a variety of applications.

- Admin UI: This web-based component allows administrators and data scientists to discover and "annotate" metadata.

  - It provides a search interface and an SQL-like query language that can be used to query the metadata types and objects managed by Atlas.

  - The UI builds its functionality on the Atlas REST API.

- Ranger Tag-Based Policies: Apache Ranger is an advanced security management solution for the Hadoop ecosystem that integrates with a wide variety of components.

  - By integrating with Atlas, Ranger allows security administrators to define metadata-driven security policies for effective governance.

  - Ranger is a consumer of the metadata change events notified by Atlas.
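
The consumer role described above can be sketched as follows: a downstream application reads entity notifications (Atlas publishes them to a Kafka topic, `ATLAS_ENTITIES` by default) and keeps a local index of classifications per entity. No Kafka client is used here so that the example stays self-contained; the message layout in `SAMPLE_EVENT` is an assumed, simplified shape of an entity notification, and the GUID is hypothetical. This is not how Ranger itself is implemented.

```python
import json

# Assumed, simplified layout of an Atlas entity notification.
SAMPLE_EVENT = json.dumps({
    "version": {"version": "1.0.0"},
    "message": {
        "type": "ENTITY_NOTIFICATION_V2",
        "operationType": "CLASSIFICATION_ADD",
        "entity": {
            "typeName": "hive_table",
            "guid": "hypothetical-guid-0001",
            "classificationNames": ["PII"],
        },
    },
})


def parse_event(raw):
    """Extract the fields a tag-aware consumer needs from one notification."""
    message = json.loads(raw).get("message", {})
    entity = message.get("entity", {})
    return {
        "operation": message.get("operationType"),
        "guid": entity.get("guid"),
        "type_name": entity.get("typeName"),
        "tags": set(entity.get("classificationNames") or []),
    }


def apply_event(tag_index, event):
    """Update a guid -> classifications index in response to one event."""
    if event["guid"] is None:
        return tag_index
    if event["operation"] == "ENTITY_DELETE":
        tag_index.pop(event["guid"], None)
    else:
        tag_index[event["guid"]] = event["tags"]
    return tag_index


tag_index = apply_event({}, parse_event(SAMPLE_EVENT))
print(tag_index)
```

In a real deployment, `parse_event` would be called for each record returned by a Kafka consumer subscribed to the notification topic.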

Apache Atlas Resources

- Knowledge Base: Leverages existing metadata. Supports metadata exchange with other components, third-party applications, or governance tools.

- Centralized Auditing: Indexed, searchable storage of a historical repository of all governance events, including access, grants, denials, operational events related to data provenance, and metrics.

- Policy Engine: Runtime compliance policy based on data classification schemes, attributes, and roles.

- RESTful Interface (compliant with REST criteria): Support for third-party applications through REST APIs.

- Lineage:

  - Intuitive UI to visualize data lineage as data moves through processes.

  - REST APIs to work with types and instances.

- Integration with Ranger: Integration with Apache Ranger enables authorization and masking on data access, based on the classifications associated with entities. It can be used to implement dynamic classification-based and role-based security policies.

- High Availability and Fault Tolerance: Apache Atlas uses and interacts with a variety of systems to provide metadata management and data lineage for data administrators. These dependencies must be configured appropriately to achieve a high degree of availability. The Atlas community documentation describes this support in detail.

- Classification Propagation: Apache Atlas allows the classifications of an entity to be automatically associated with other related entities. This is very useful in scenarios where a dataset derives its data from other datasets.

- Business Metadata: The Atlas type system allows the definition of a model and the creation of entities for the metadata objects that you want to manage. The model captures technical attributes such as name, description, creation date, and number of replicas. Business metadata extends these technical attributes with additional attributes that capture business details, which helps in organizing, searching, and managing metadata entities.

- Security: The following security features are available to help secure the platform:

  - Support for one-way SSL (server authentication) and two-way SSL (client and server authentication).

  - Service Authentication: On startup, the platform is associated with an authenticated identity. By default, in an unsecured environment, this identity is the OS user who started the server. In a secure cluster that uses Kerberos, however, it is recommended to configure a keytab and principal so that the platform authenticates to the KDC. The service can then interact with the other secure services in the cluster.

  - SPNEGO-based Authentication: HTTP access to the Atlas platform can be protected by enabling SPNEGO support on the platform. Two mechanisms are currently supported:

    - simple: authentication is performed through a provided username.

    - Kerberos: the client's authenticated KDC identity is used to authenticate to the server.

- Repair Index: In rare cases, the corresponding indexes are not created in Solr during entity creation. Because Atlas relies heavily on Solr for search, such an entity is not returned by a search (advanced search is not affected). The Atlas Index Repair Utility for JanusGraph allows all indexes to be restored.

- Atlas Server Entity Type: The AtlasServer entity type is a special type of entity:

  - It is created during export or import operations.

  - It has special property pages that show detailed audits for export and import operations.

  - Entities are linked to it through the new SoftReference attribute option.

- Replicated Attributes: The replicatedFrom and replicatedTo attributes of the Referenceable entity type make it possible to know how entities arrived at the Apache Atlas instance, whether they were created by hook ingestion or imported from another instance.

- SoftReference: The attribute persistence strategy is determined by the attribute's type. Setting the isSoftReference attribute option to true causes a non-primitive attribute type to be treated as a primitive attribute.

- Classification-based access controls: A data entity can be tagged with metadata related to compliance or to a specific taxonomy, and that tag can be used to assign permissions to a user or group.

- Data expiration-based access policy: Expiration dates can be set so that access to tagged data is automatically denied after the specified date.

- Location-specific access policies: A user can be granted access while in one location but denied it in another, even though it is the same user.

- Prohibition of dataset combinations: Restrictions can be defined on datasets, for example to prevent them from being combined.
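
The interplay between classification propagation and classification-based access control, including expiration dates, can be illustrated with a small toy model. It is not the Atlas or Ranger implementation: the lineage, dataset names, groups, and policy structure below are all hypothetical, and the rule "every classification on a dataset must be covered by a non-expired grant" is a simplifying assumption.

```python
from collections import deque
from datetime import date


def propagate(lineage, direct_tags):
    """Push classifications from each dataset to everything derived from it.

    lineage: dict mapping a dataset to the datasets derived from it.
    direct_tags: dict mapping a dataset to its directly applied classifications.
    """
    effective = {name: set(tags) for name, tags in direct_tags.items()}
    queue = deque(effective)
    while queue:
        current = queue.popleft()
        for derived in lineage.get(current, []):
            target = effective.setdefault(derived, set())
            missing = effective[current] - target
            if missing:  # re-visit only when something new arrived
                target |= missing
                queue.append(derived)
    return effective


def is_allowed(user_groups, dataset_tags, policies, today):
    """Allow access only if every tag is covered by a non-expired grant."""
    for tag in dataset_tags:
        granted = any(
            p["tag"] == tag
            and bool(user_groups & p["groups"])
            and (p["expires"] is None or today <= p["expires"])
            for p in policies
        )
        if not granted:
            return False
    return True


# Hypothetical lineage: raw data feeds a cleaned table, which feeds a report.
lineage = {
    "raw_customers": ["clean_customers"],
    "clean_customers": ["customer_report"],
}
effective = propagate(lineage, {"raw_customers": {"PII"}})

# Hypothetical policy: the compliance group may read PII until 2030-01-01.
policies = [{"tag": "PII", "groups": {"compliance"}, "expires": date(2030, 1, 1)}]

print(effective["customer_report"])
print(is_allowed({"compliance"}, effective["customer_report"], policies, date(2029, 6, 1)))
print(is_allowed({"compliance"}, effective["customer_report"], policies, date(2030, 6, 1)))
```

Because the report inherits the classification of its source, a single policy on the `PII` tag governs all three datasets without being repeated per dataset.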

Apache Atlas Project Details

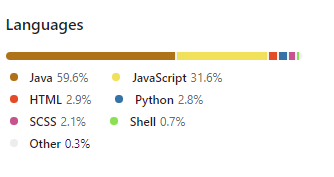

Apache Atlas was developed predominantly in Java. It has Dashboard components in JavaScript.

Source(s):