High Availability

High availability is the ability of a system, component, or application to remain operational even after the failure of one or more of its elements. The goal is to ensure continuous operation, minimizing downtime and maximizing service reliability.

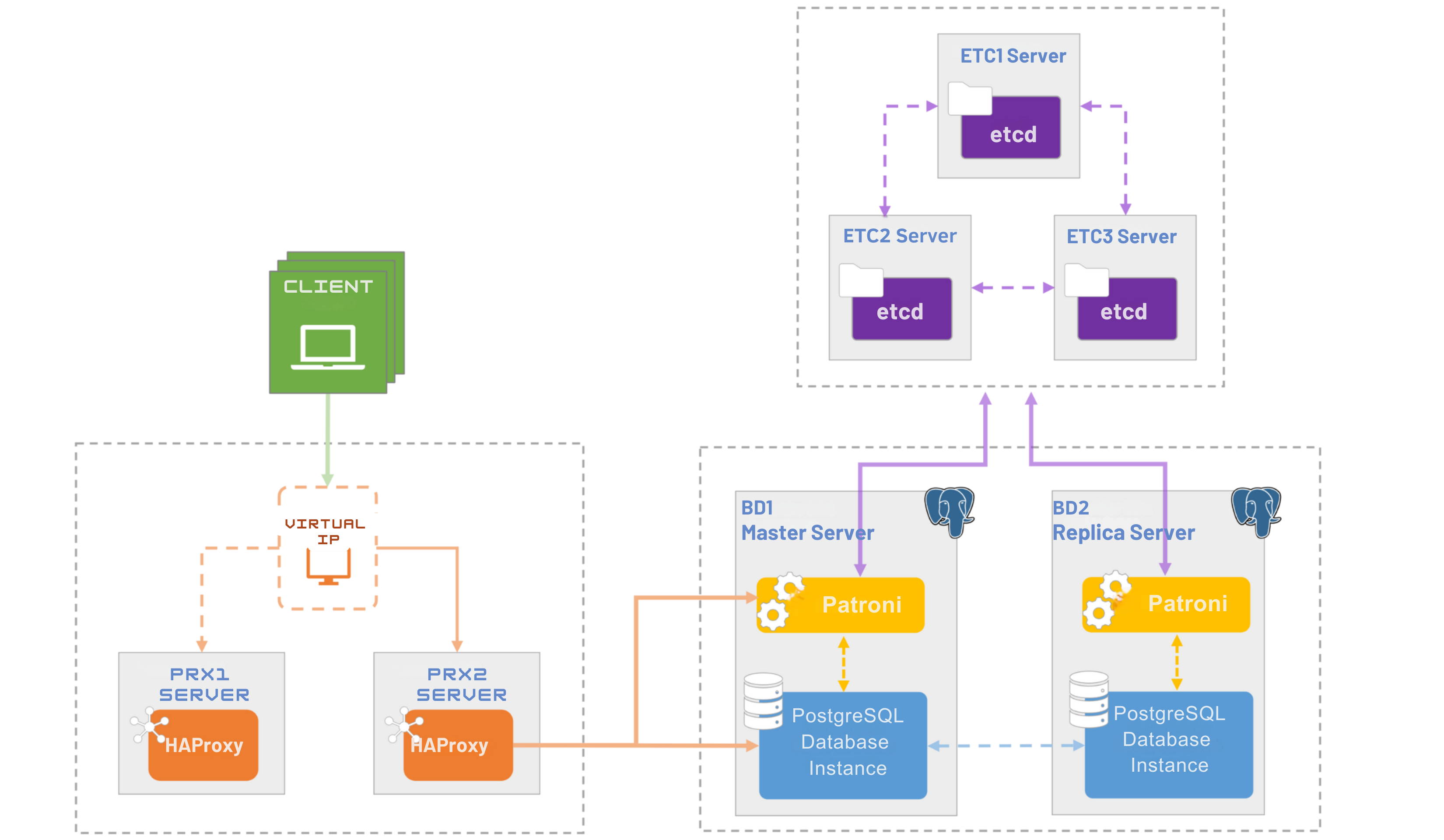

PostgreSYS is designed to be robust and resilient, keeping database services accessible in the event of hardware or software failures. The platform integrates components that enable automatic failover, reverse proxying, efficient replica management, and more.

Using PgSmart, it is possible to define High Availability Environments, composed of a primary database instance (leader) and one or more replicas (synchronous or asynchronous).

Patroni

Patroni, the High Availability Agent of the PostgreSYS Platform, plays a crucial role in ensuring the continuity of database services, especially in mission-critical environments.

One of the most widely adopted high availability managers in the PostgreSQL community, Patroni is an open-source project that provides robust features for automating and orchestrating database clusters. Its key capabilities include:

- Automatic Failure Detection and Recovery: Automates the failover process by detecting failures in primary instances and promoting replicas, thereby minimizing downtime.

- Distributed Consensus: Supports multiple backends for consensus and distributed cluster configuration storage, including etcd, Consul, and ZooKeeper.

- API-first Design: Offers a REST API for managing and querying cluster state, simplifying integration with monitoring tools, load balancers, and automation platforms.
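As a rough illustration of the REST API in use, the sketch below reads the cluster view and picks out the current leader. It assumes the `GET /cluster` endpoint returns a JSON object with a `members` list whose entries carry `name` and `role` fields, and that the agent listens on Patroni's default REST port, 8008. The node address in the comment is hypothetical.

```python
import json
from typing import Optional
from urllib.request import urlopen


def find_leader(cluster: dict) -> Optional[str]:
    """Return the name of the member whose role is 'leader', or None."""
    for member in cluster.get("members", []):
        if member.get("role") == "leader":
            return member.get("name")
    return None


def fetch_cluster(base_url: str, timeout: float = 2.0) -> dict:
    """GET <base_url>/cluster and decode the JSON body."""
    with urlopen(f"{base_url}/cluster", timeout=timeout) as resp:
        return json.load(resp)


# Example call against a hypothetical node address:
#   find_leader(fetch_cluster("http://10.0.0.11:8008"))
```

Keeping the parsing separate from the HTTP call makes the logic easy to exercise with a canned response in a monitoring script or test.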

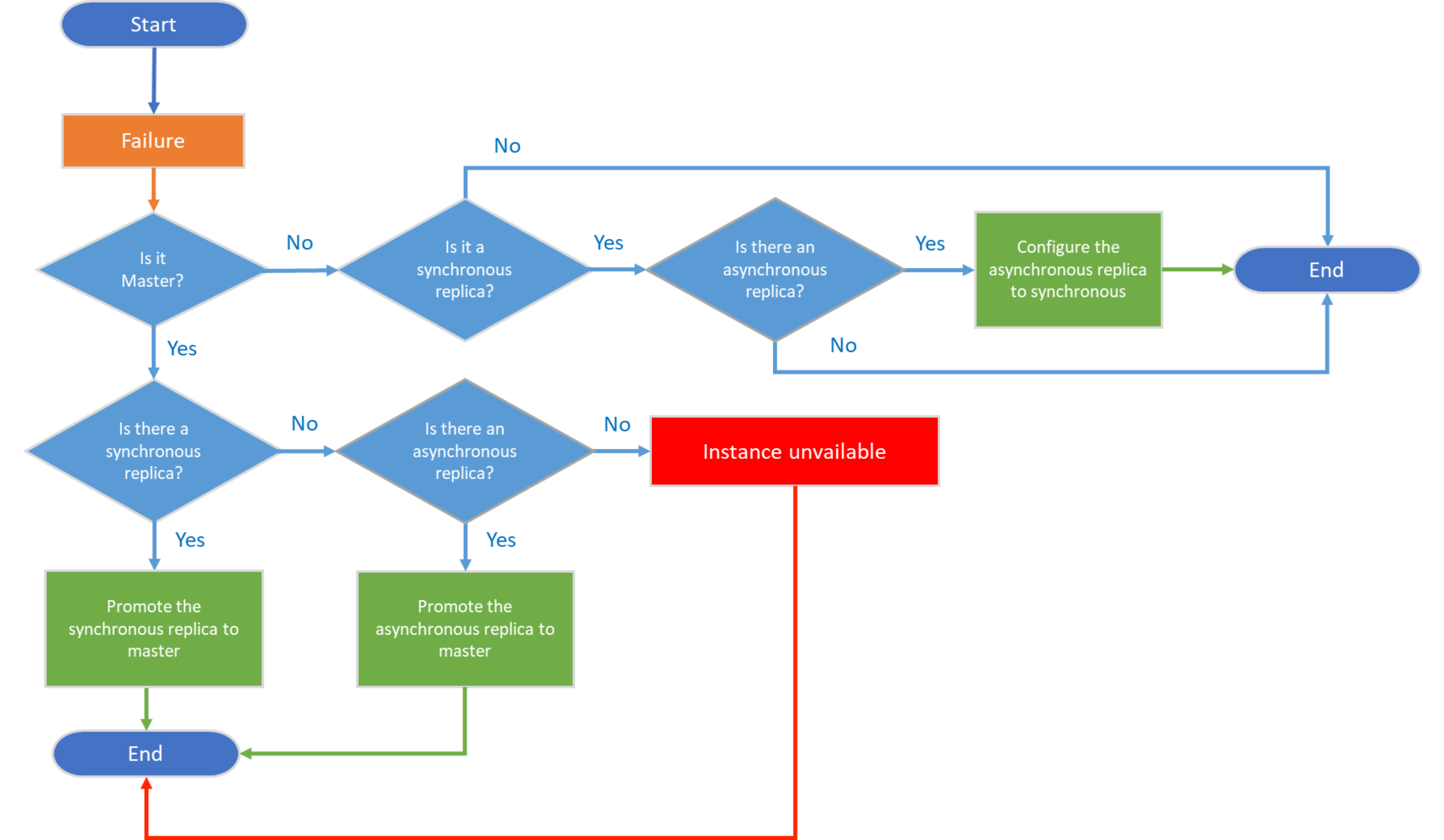

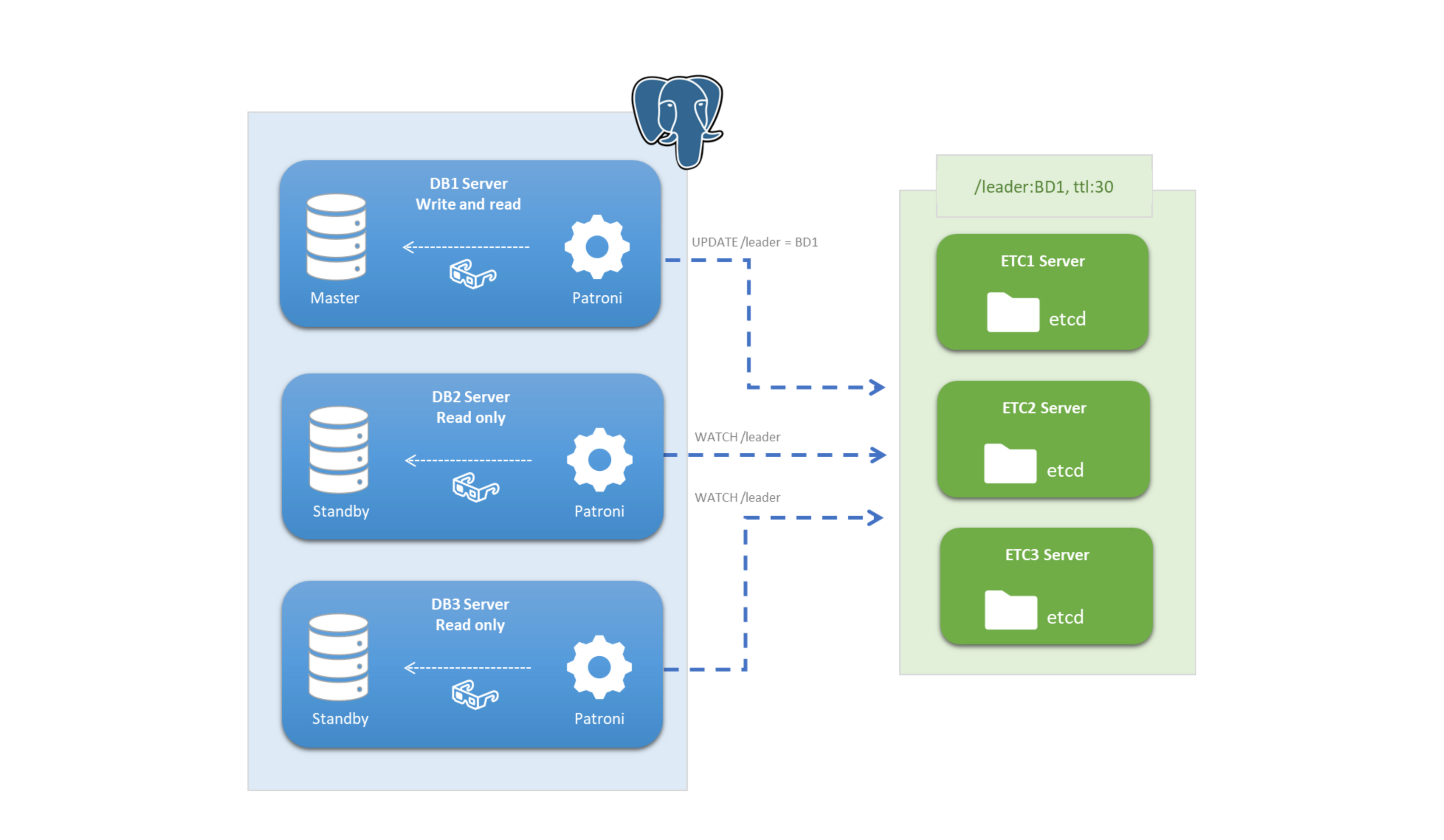

In a High Availability Environment, Patroni continuously monitors the health of PostgreSQL instances, using a Distributed Configuration Store (DCS) for leader election and cluster state management. If the primary instance fails, Patroni identifies the best candidate replica, based on replication delay and node health, and promotes it. The DCS is then updated, and all remaining replicas are reconfigured to follow the new primary.
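The selection step can be pictured with a deliberately simplified sketch. This is not Patroni's actual election logic, which runs through the DCS; it only shows the idea of filtering out unhealthy or lagging replicas and preferring the one with the least lag. The `Replica` class, its fields, and `pick_candidate` are hypothetical names, and the 1 MiB lag threshold is an assumed value chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Replica:
    name: str
    healthy: bool
    lag_bytes: int  # how far the replica is behind the last known primary position


def pick_candidate(
    replicas: Sequence[Replica], max_lag_bytes: int = 1_048_576
) -> Optional[Replica]:
    """Return the healthy replica with the smallest lag, or None if none qualifies."""
    eligible = [r for r in replicas if r.healthy and r.lag_bytes <= max_lag_bytes]
    # Ties on lag are broken by name so the result is deterministic.
    return min(eligible, key=lambda r: (r.lag_bytes, r.name), default=None)
```

Returning `None` when every replica is unhealthy or too far behind reflects the trade-off involved: promoting a badly lagging replica restores service but may lose committed transactions.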

The diagram below illustrates possible Agent actions in case of failure within the High Availability Environment.

In addition to failover management, Patroni supports scheduled switchovers and dynamic configuration changes, offering a comprehensive solution for maintaining PostgreSQL clusters in high availability.

In a High Availability environment, it is essential to install the High Availability Agent on every machine that hosts a PostgreSQL database instance.

etcd

etcd, the Distributed Configuration Store (DCS) of PostgreSYS, enables High Availability Agents to reliably and consistently share configuration and state information—crucial for maintaining the integrity and availability of instances.

An open-source project initiated by CoreOS, etcd provides a dependable key-value storage model, specifically designed for critical distributed systems. By using the Raft consensus algorithm, it ensures data consistency and availability even during partial network or component failures.

As a curiosity, the name "etcd" combines the familiar UNIX directory /etc, which stores configuration files, with the letter "d" for "distributed."

Key features include:

- Consistency and Fault Tolerance: Uses the Raft algorithm to manage replication and ensure that all cluster members agree on the current system state.

- Simple User Interface: Provides an HTTP/HTTPS API for easy and programmatic read/write operations.

- Observability: Supports watches, allowing services to dynamically respond to configuration or state changes.

- Security: Includes client authentication, access control for keys, and encrypted communication via TLS.

- Performance and Scalability: Optimized for speed and capable of handling high data loads with low latency.
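To give a feel for the HTTP API, the sketch below builds request bodies for etcd's v3 JSON gateway. It assumes the gateway is exposed under the `/v3/` prefix and expects keys and values to be base64-encoded. The helper names and the example key are hypothetical, and the code only constructs the payloads without sending them.

```python
import base64
import json
from typing import Tuple


def b64(text: str) -> str:
    """Base64-encode a UTF-8 string, as the JSON gateway expects."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def put_request(key: str, value: str) -> Tuple[str, str]:
    """Return (path, JSON body) for writing a key."""
    return "/v3/kv/put", json.dumps({"key": b64(key), "value": b64(value)})


def range_request(key: str) -> Tuple[str, str]:
    """Return (path, JSON body) for reading a single key."""
    return "/v3/kv/range", json.dumps({"key": b64(key)})


# Example: path, body = put_request("/demo/config", "enabled")
# The body would then be sent as an HTTP POST to the etcd client endpoint.
```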

etcd is built for high availability and fault tolerance, typically running in a multi-node cluster where each member replicates data and participates in Raft consensus to validate changes before commitment.

For mission-critical environments, it is recommended to configure an etcd cluster with an odd number of members (3, 5, 7, etc.) to ensure that the system can tolerate the failure of up to (n-1)/2 members without compromising data availability or consistency.
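The arithmetic behind this recommendation can be checked with a short sketch. Raft needs a majority (quorum) of members to agree before a change is committed, so the number of failures a cluster survives is whatever remains beyond that majority. The function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Members that can fail while a majority is still reachable."""
    return members - quorum(members)


# Cluster sizes 3, 4, 5 and 7 tolerate 1, 1, 2 and 3 failures respectively.
sizes = [3, 4, 5, 7]
tolerances = [tolerated_failures(n) for n in sizes]
```

A 4-member cluster tolerates only one failure, the same as a 3-member cluster, so the extra even-numbered member adds replication cost without adding fault tolerance.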

HAProxy

HAProxy, the High Availability Proxy Service of PostgreSYS, acts as an intermediary between client applications and the database, directing connections to the primary instance based on each server's health and load.

Widely recognized for its efficiency in TCP/HTTP load balancing, HAProxy functions as a high-performance reverse proxy for various applications. It stands out for:

- Flexibility: Supports multiple load balancing algorithms, including round robin, least connections, and source hashing.

- Security: Implements robust security measures such as process isolation, privilege reduction, and traffic filtering to protect against attacks.

- Performance: Designed for high performance, capable of handling thousands of concurrent connections with low latency.

- Monitoring and Logging: Provides detailed logs and real-time metrics for performance monitoring and analysis.

The architecture of HAProxy is optimized for fast and efficient processing of network connections. It operates on an event-driven model that handles multiple connections in parallel, maximizing hardware resource utilization.

- Multithreaded Processing: Distributes workload across multiple CPU cores, increasing processing capacity and efficiency.

- Isolation and Security: Uses isolation techniques such as chroot jail and sandboxing to improve operational security.

- Dynamic Balancing: Dynamically adapts to changes in the health of back-end servers, adjusting traffic distribution as needed.
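One common way to combine health-based routing with Patroni is to have HAProxy probe each agent's REST API and send traffic only to the node that answers as primary. The sketch below renders such a `listen` section as text. It is an illustration, not the configuration PgSmart generates: the section name, node names, addresses, and ports are assumptions, and it relies on the Patroni `/primary` endpoint returning HTTP 200 only on the current leader.

```python
from typing import Mapping


def render_listen_section(
    nodes: Mapping[str, str],
    listen_port: int = 5000,
    pg_port: int = 5432,
    patroni_port: int = 8008,
) -> str:
    """Render an HAProxy 'listen' section that forwards to the primary only."""
    lines = [
        "listen postgres_primary",
        f"    bind *:{listen_port}",
        "    mode tcp",
        # Probe the Patroni REST API instead of the PostgreSQL port itself.
        "    option httpchk GET /primary",
        "    http-check expect status 200",
        "    default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions",
    ]
    for name, host in nodes.items():
        # Traffic goes to pg_port; the health check goes to patroni_port.
        lines.append(f"    server {name} {host}:{pg_port} check port {patroni_port}")
    return "\n".join(lines) + "\n"


# Example: render_listen_section({"pg1": "10.0.0.11", "pg2": "10.0.0.12"})
```

With this layout, a failover needs no change on the proxy: once the promoted replica starts answering the check with 200, HAProxy marks it up and routes new connections to it.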